Statistics has long been a cornerstone of how we understand the world around us. From early population counts to modern-day data science, one element remains paramount: parameters. Parameters sit at the heart of statistical analysis, and how well they are defined and estimated can make or break an experiment’s conclusions. In this article, we’ll explore the importance of parameters in statistics and why they are so essential for accurate results.

As the saying attributed to Laozi goes, “A journey of a thousand miles begins with a single step.” To understand the role of parameters in statistics, it helps to first look at the foundations of statistical theory. Statistics is largely about finding patterns and relationships in data; plotting observations on a graph, for example, can reveal trends and correlations. This rests on two key ideas: probability and inference. Probability tells us how likely different outcomes are under a given model, while inference lets us generalize from observed data to the wider population it came from and make predictions.

Parameters play a central role in both probabilistic models and inferential reasoning. In a probability model, they fix the location, spread and shape of the distribution, and therefore the probabilities it assigns to each outcome; in inference, they are the unknown population quantities we try to estimate. With well-chosen and well-estimated parameters, you get more precise estimates and clearer insight into the underlying trends in your data. So let’s delve deeper into what parameters do, how they affect calculations and what they imply for accuracy, so you too can unlock the power of parameters in your own research!

What Are Parameters?

It’s almost ironic that when it comes to understanding parameters in statistics, the first question to be asked is ‘what are parameters?’. A parameter is a numerical characteristic of a population, such as its mean or its variance. Parameters have long been used to describe populations, and they are so essential to inferential statistics that their importance can’t be overstated.

Parameters give us a fixed reference point against which samples can be compared: a sample statistic, such as a sample mean, is interpreted as an estimate of the corresponding population parameter. Parameters also describe the shape of a population’s distribution. For a normal distribution, for example, the mean describes central tendency and the variance describes spread. The standard deviation is the square root of the variance, and the related standard error (the standard deviation divided by the square root of the sample size) measures how much a statistic such as the sample mean varies between random samples taken from the same population.
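A minimal Python sketch of these definitions, using the standard-library `statistics` module on a small made-up data set (the numbers are purely illustrative). It computes population parameters by dividing by N, sample statistics with the usual n − 1 divisor, and the standard error of the sample mean:

```python
import math
import statistics

# Hypothetical population: every value is known, so parameters can be computed.
population = [2, 4, 4, 4, 5, 5, 7, 9]

mu = statistics.mean(population)            # population mean (parameter)
sigma2 = statistics.pvariance(population)   # population variance, divides by N
sigma = statistics.pstdev(population)       # population standard deviation

# The standard deviation is the square root of the variance.
assert math.isclose(sigma, math.sqrt(sigma2))

# A sample taken from the population gives statistics that estimate mu and sigma.
sample = [4, 5, 7, 9]
x_bar = statistics.mean(sample)             # sample mean
s = statistics.stdev(sample)                # sample standard deviation, divides by n - 1

# Standard error: how much the sample mean is expected to vary between samples.
standard_error = s / math.sqrt(len(sample))

print(f"mu = {mu}, sigma^2 = {sigma2}, sigma = {sigma}")
print(f"x_bar = {x_bar}, s = {s:.3f}, SE = {standard_error:.3f}")
```

Note the two divisors: `pvariance`/`pstdev` treat the data as the whole population, while `variance`/`stdev` treat it as a sample.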

In summary, parameters play an important role in inferential statistics because they are the population characteristics that our samples are used to estimate. Without them, there would be no well-defined target for inference, and no way to draw sound conclusions about the underlying population from our data sets.

Types Of Parameters

Knowing the different kinds of quantities involved and how they are used can be like discovering a hidden treasure: it opens up an entirely new world of understanding and insight. In statistics, it helps to distinguish two main ideas: descriptive measures, which summarize data (and are parameters when computed over an entire population), and the parameters of probability distributions.

Descriptive statistics summarize a dataset with numerical characteristics such as the range and the mean. Distribution parameters, by contrast, define the probability distributions used to model data and sampling error: a normal distribution is defined by its mean μ and standard deviation σ, and a binomial distribution by its number of trials n and success probability p. Once estimated from past data, such a model can be used to make predictions. Statistical inference connects the two: it draws conclusions about population parameters from what we learn in sample datasets.
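To make the distinction concrete, here is a small Python sketch: descriptive statistics are computed directly from hypothetical data, while a binomial distribution is fully determined by its parameters n and p. The values n = 20 and p = 0.3 and the helper `binomial_pmf` are illustrative assumptions, not taken from any particular study:

```python
import math
import statistics

# Descriptive statistics summarize observed (hypothetical) data directly.
data = [3, 7, 8, 5, 12, 14, 21, 13, 18]
data_range = max(data) - min(data)
data_mean = statistics.mean(data)

# A binomial distribution is fully determined by two parameters:
# n (number of independent trials) and p (success probability per trial).
n, p = 20, 0.3

def binomial_pmf(k, n, p):
    """P(X = k) for X ~ Binomial(n, p)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

# The parameters determine every probability and summary of the model.
binomial_mean = n * p                  # E[X] = n * p
binomial_variance = n * p * (1 - p)    # Var[X] = n * p * (1 - p)
prob_six = binomial_pmf(6, n, p)       # probability of exactly 6 successes

print(f"range = {data_range}, mean = {data_mean:.2f}")
print(f"E[X] = {binomial_mean}, Var[X] = {binomial_variance:.2f}, P(X = 6) = {prob_six:.4f}")
```

Changing `n` or `p` changes every probability the model produces, which is what it means for parameters to define a distribution.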

Measures of spread, such as the range of values, are important when analyzing data because they show how much variation exists within a sample or between different samples. By looking at both descriptive statistics and distribution parameters, we can gain valuable information about our data sets and make informed decisions moving forward.

Examples Of Parameters In Statistics

Some may argue that examples of parameters in statistics are too abstract to understand. On the contrary, understanding what parameters are and why they matter is essential for any statistical analysis.

Population size, usually written N, is one example of a parameter. It is not needed for every calculation: the sample mean of a simple random sample is an unbiased estimate of the population mean whether or not N is known. It does matter when sampling without replacement from a finite population, because the standard error of the sample mean is then multiplied by the finite population correction factor √((N − n)/(N − 1)), where n is the sample size. When N is much larger than n this factor is close to 1, but when a sample covers a large share of a small population, ignoring it overstates the standard error. By being able to identify parameters like these and the role they play, one can gain deeper insights into how to interpret data correctly.
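The following Python sketch shows where the population size N enters the calculation. `standard_error_of_mean` is a hypothetical helper written for this illustration; it assumes the population standard deviation σ is known and applies the finite population correction only when N is supplied:

```python
import math

def standard_error_of_mean(sigma, n, N=None):
    """Standard error of the sample mean under simple random sampling.

    sigma: population standard deviation (assumed known)
    n:     sample size
    N:     population size; if given, apply the finite population
           correction for sampling without replacement
    """
    if n < 1:
        raise ValueError("sample size must be at least 1")
    se = sigma / math.sqrt(n)
    if N is not None:
        if N < 2 or n > N:
            raise ValueError("need N >= 2 and n <= N")
        # Finite population correction: sqrt((N - n) / (N - 1)).
        se *= math.sqrt((N - n) / (N - 1))
    return se

print(standard_error_of_mean(10, 100))                # no correction
print(standard_error_of_mean(10, 100, N=500))         # small population
print(standard_error_of_mean(10, 100, N=1_000_000))   # large population
```

For σ = 10 and n = 100, the uncorrected standard error is 1.0; with N = 500 it shrinks to about 0.895, while with N = 1,000,000 the correction is negligible.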

Several related concepts build on parameters to support more accurate conclusions. Confidence intervals are interval estimates of a parameter; degrees of freedom are a parameter of the t, chi-square and F distributions; and the t-value and F-ratio are test statistics whose distributions depend on those degrees of freedom. Understanding these concepts and their applications helps one judge what the available data can support and draw appropriate conclusions from it.
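As an illustration of how degrees of freedom, a t-value and a confidence interval fit together, here is a Python sketch of a one-sample t statistic and a t-based interval for the mean. The function names and data are hypothetical, and because the standard library has no t-distribution quantile function, the critical value `t_crit` is passed in (for example from a t table or a library such as SciPy):

```python
import math
import statistics

def one_sample_t(sample, mu0):
    """t statistic and degrees of freedom for H0: population mean == mu0."""
    n = len(sample)
    if n < 2:
        raise ValueError("need at least two observations")
    x_bar = statistics.mean(sample)
    s = statistics.stdev(sample)
    t = (x_bar - mu0) / (s / math.sqrt(n))
    return t, n - 1  # degrees of freedom: n - 1

def t_interval(sample, t_crit):
    """Confidence interval x_bar +/- t_crit * s / sqrt(n) for the population mean.

    t_crit must be the critical value of the t distribution with n - 1
    degrees of freedom for the desired confidence level.
    """
    n = len(sample)
    x_bar = statistics.mean(sample)
    margin = t_crit * statistics.stdev(sample) / math.sqrt(n)
    return x_bar - margin, x_bar + margin

sample = [5.1, 4.9, 5.3, 5.5, 5.0]   # hypothetical measurements
t, df = one_sample_t(sample, mu0=5.0)
low, high = t_interval(sample, t_crit=2.776)  # 95% critical value for df = 4
print(f"t = {t:.3f} with {df} degrees of freedom")
print(f"95% CI: ({low:.3f}, {high:.3f})")
```

The value 2.776 is the two-sided 95% critical value for 4 degrees of freedom found in standard t tables; a different sample size needs a different value.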

Statistical Notations

Statistical notation is essential for understanding the role of parameters in statistics. By convention, population parameters are usually written with Greek letters, such as μ (mu) for a mean, σ (sigma) for a standard deviation, or α (alpha) and β (beta) for the coefficients of a regression model, while variables are written with Latin letters such as x and y. In this context, a parameter is an unknown value describing a population characteristic, which must be estimated from sample data; estimates are often marked with a hat, as in β̂. For example, in the simple linear regression model y = β₀ + β₁x, the variables x and y are plotted on the X and Y axes, while the parameters β₀ (the intercept) and β₁ (the slope) describe the line, and their estimates β̂₀ and β̂₁ are calculated from the sample.
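For reference, the common textbook pairing of parameters with the statistics that estimate them can be written as follows. This is a summary of widespread conventions (individual texts vary), where x₁, …, xₙ are the sample values and k is the number of successes in n trials:

```latex
\begin{aligned}
&\text{Population mean } \mu && \text{estimated by } \bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i \\
&\text{Population variance } \sigma^2 && \text{estimated by } s^2 = \frac{1}{n-1}\sum_{i=1}^{n} (x_i - \bar{x})^2 \\
&\text{Population proportion } p && \text{estimated by } \hat{p} = \frac{k}{n} \\
&\text{Regression slope } \beta_1 \text{ in } y = \beta_0 + \beta_1 x + \varepsilon && \text{estimated by } \hat{\beta}_1
\end{aligned}
```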

When working through a statistical problem, it’s important to choose notation that makes clear whether each symbol denotes a population parameter, a sample statistic or a variable. This eliminates confusion and helps ensure accuracy when interpreting results. By carefully selecting clear and concise notation, researchers can accurately communicate their findings about the role of parameters in statistics.

Estimation Of Parameters

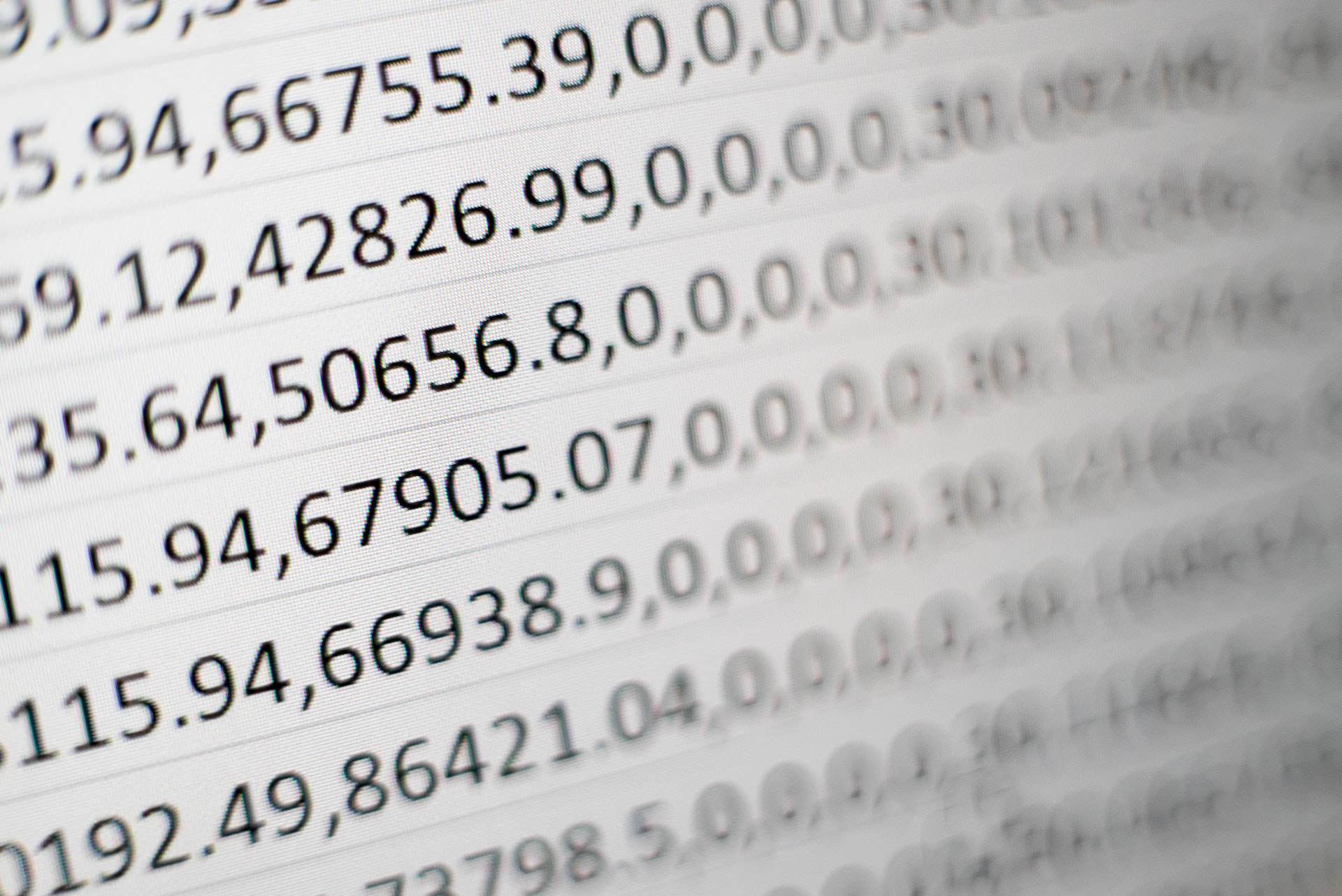

Because parameters are rarely known exactly, estimating them plays an important role in statistics. Useful tools for describing the data that estimates are based on include frequency distributions, the sample standard deviation and the interquartile range. For instance, if the body weight of a population is to be analysed, the average or median weight of a sample drawn from it can serve as an estimate of the corresponding population parameter.

The frequency distribution shows how many individuals fall within each specified interval, while the sample standard deviation describes the spread of data points around the mean. The interquartile range (the distance between the first and third quartiles) measures the spread of the middle half of the data; a common rule of thumb flags values more than 1.5 times the interquartile range below the first quartile or above the third quartile as potential outliers. Taken together, these summaries help decide which estimate is appropriate: for skewed data or data with outliers, for example, the median may describe the center better than the mean.
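A Python sketch of these three summaries on hypothetical body-weight data (in kg), using only the standard library. The 10 kg bin width and the 1.5 × IQR fence are conventional choices assumed for illustration; `statistics.quantiles` uses its default "exclusive" method here, and other quartile methods can give slightly different values:

```python
import statistics
from collections import Counter

# Hypothetical body weights in kg (illustrative only, not real measurements).
weights = [58, 61, 63, 64, 66, 67, 68, 70, 72, 75, 79, 118]

# Frequency distribution: number of people in each 10 kg interval.
bin_width = 10
freq = Counter((w // bin_width) * bin_width for w in weights)
for start in sorted(freq):
    print(f"{start}-{start + bin_width - 1} kg: {freq[start]}")

# Point estimates of the population mean and median, plus the spread.
mean_w = statistics.mean(weights)
median_w = statistics.median(weights)
s = statistics.stdev(weights)

# Quartiles, interquartile range, and the 1.5 * IQR outlier fences.
q1, _, q3 = statistics.quantiles(weights, n=4)
iqr = q3 - q1
lower_fence, upper_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = [w for w in weights if w < lower_fence or w > upper_fence]

print(f"mean = {mean_w:.2f}, median = {median_w}, s = {s:.2f}")
print(f"IQR = {iqr}, potential outliers: {outliers}")
```

Here the single heavy value pulls the mean (71.75 kg) above the median (67.5 kg), and the 1.5 × IQR rule flags it as a potential outlier.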

By understanding how to use these techniques to measure and analyse various types of data effectively, it is possible to get meaningful insights from statistical experiments and make more informed decisions.

Parameter Estimation Methods

Parameters play a crucial role in statistics, both when we estimate them and when we decide which ones a model should include. Estimating parameters is central to working with sample statistics, as it allows us to infer what the true population parameter might be. When specifying a model, we also need to consider the characteristics of our sample data, such as the size and quality of the data set; this helps us choose which variables, and therefore which parameters, go into the model.

Several parameter estimation methods are in common use. Maximum Likelihood Estimation (MLE) is a popular method that estimates the unknown parameters of a statistical model by choosing the values under which the observed data are most probable. Another technique is Bayesian inference, which combines a prior distribution, encoding existing knowledge or beliefs about the system being studied, with the observed data to produce a posterior probability distribution over possible values of the unknown parameters. Finally, least squares regression estimates unknown parameters by minimizing the sum of squared residuals between observed values and the values predicted by a linear equation or other function.
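The three methods can be sketched in a few lines of Python each. This is an illustration under simple assumptions rather than a general implementation: the maximum likelihood and Bayesian examples model coin flips as Bernoulli trials with unknown success probability p, the Bayesian example assumes a Beta(a, b) prior (which is conjugate to the binomial likelihood), and the least squares example fits a straight line. All function names are hypothetical:

```python
import statistics

# Maximum likelihood: for k successes in n Bernoulli(p) trials, the likelihood
# p**k * (1 - p)**(n - k) is maximized at p_hat = k / n.
def bernoulli_mle(k, n):
    return k / n

# Bayesian inference: a Beta(a, b) prior on p combined with k successes in
# n trials gives a Beta(a + k, b + n - k) posterior (conjugate update).
def beta_posterior(a, b, k, n):
    return a + k, b + (n - k)

def beta_mean(a, b):
    return a / (a + b)

# Least squares: choose b0, b1 minimizing sum((y - (b0 + b1 * x))**2).
def least_squares_line(xs, ys):
    x_bar, y_bar = statistics.mean(xs), statistics.mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b1 = sxy / sxx            # slope
    b0 = y_bar - b1 * x_bar   # intercept
    return b0, b1

k, n = 7, 10                                  # hypothetical: 7 heads in 10 flips
print(bernoulli_mle(k, n))                    # maximum likelihood estimate
post_a, post_b = beta_posterior(1, 1, k, n)   # uniform Beta(1, 1) prior
print(beta_mean(post_a, post_b))              # posterior mean
print(least_squares_line([1, 2, 3, 4, 5], [2.1, 3.9, 6.2, 7.8, 10.1]))
```

With 7 heads in 10 flips, the maximum likelihood estimate is 0.7; with a uniform Beta(1, 1) prior the posterior is Beta(8, 4), whose mean of 8/12 ≈ 0.667 is pulled slightly toward the prior mean of 0.5.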

These techniques allow statisticians to estimate parameters from data, assess how well the resulting models fit, and make informed decisions about which models will give better results. Properly estimating and selecting parameters can therefore strongly influence whether a modeling project succeeds or fails.

Difference Between A Parameter And A Statistic

As a statistician, it is important to understand the difference between a parameter and a statistic. A parameter is a fixed, usually unknown, value that describes a whole population, conventionally written with Greek letters such as µ (mu) for the mean or σ (sigma) for the standard deviation. It can only be calculated exactly if data are collected from the entire population; otherwise it has to be estimated. A statistic, on the other hand, is calculated from sample data, such as the results of an experiment or survey, and is written with Latin letters such as x̄ (sometimes m) for the sample mean or s for the sample standard deviation.

For example, consider a binomial setting: the true proportion p of voters in a population who support a proposal is a parameter, while the proportion p̂ of supporters among 1,000 randomly surveyed voters is a statistic. The parameter describes the population as a whole and does not change; the statistic summarizes one particular sample, varies from sample to sample, and is used to estimate the parameter.
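A short simulation in Python makes the distinction between a parameter and a statistic visible. The population proportion `TRUE_P` = 0.42 is a made-up value and the seed is arbitrary; each simulated survey draws 500 respondents and reports the sample proportion:

```python
import random
import statistics

TRUE_P = 0.42   # parameter: hypothetical population proportion, fixed

def sample_proportion(rng, n, p=TRUE_P):
    """Statistic: share of 'supporters' in one simulated random sample of size n."""
    supporters = sum(rng.random() < p for _ in range(n))
    return supporters / n

rng = random.Random(2024)   # fixed seed for a reproducible run
estimates = [sample_proportion(rng, n=500) for _ in range(200)]

print(f"parameter p = {TRUE_P}")
print(f"first three statistics: {estimates[:3]}")
print(f"mean of 200 estimates: {statistics.mean(estimates):.3f}")
print(f"spread of the estimates: {statistics.stdev(estimates):.4f}")
```

The sample proportions scatter around 0.42, and their spread should be close to the theoretical standard error √(p(1 − p)/n) ≈ 0.022.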

Using both parameters and statistics helps us make better-informed decisions: statistics summarize what we actually observed, and parameters describe the population we want to learn about. By linking the two through estimation, we can build models for predicting future outcomes, along with measures of how uncertain those predictions are.

Difference Between Parameters And Variables

When asking questions about parameters in statistics, it’s important to understand the difference between a parameter and a variable. Parameters are numerical characteristics of a population, which answer questions such as ‘What is the average age?’ or ‘How many people live in this city?’. Variables, on the other hand, are characteristics that can be measured or recorded for each individual and that vary from one individual to another. Variables are commonly divided into two broad types: scale (quantitative) variables, like height, weight and income, which measure amounts along a numerical scale; and categorical (qualitative) variables, like gender identity or political affiliation, which record which group a response falls into.
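A Python sketch using a tiny, entirely hypothetical population of eight people shows how each type of variable leads to different parameters: a mean for a scale variable and category proportions for a categorical one (the party labels "A", "B" and "C" are placeholders):

```python
import statistics
from collections import Counter

# Hypothetical population of eight people: (height in cm, political party).
# Height is a scale variable; party is a categorical variable.
population = [
    (160, "A"), (165, "B"), (170, "A"), (175, "C"),
    (180, "A"), (168, "B"), (172, "A"), (178, "C"),
]

N = len(population)   # population size: answers "how many people are there?"

# Parameter of a scale variable: the population mean height.
mean_height = statistics.mean(height for height, _ in population)

# Parameters of a categorical variable: the share of the population in each
# category (a mean of the labels themselves would be meaningless).
counts = Counter(party for _, party in population)
proportions = {party: count / N for party, count in counts.items()}

print(f"N = {N}, mean height = {mean_height} cm")
print(f"party proportions: {proportions}")
```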

In addition, parameters are often estimated by fitting a theoretical distribution to a data set rather than working with the observed (empirical) distribution directly. For example, when measuring an outcome across several samples, one might model each sample with a normal distribution and compare the fitted means and standard deviations instead of comparing every individual value. This can make results easier to compare across groups and support predictions about future outcomes, provided the theoretical distribution fits the data reasonably well. Because a handful of parameters summarize the whole distribution, such models can also reveal higher-level patterns that are hard to see in the raw values.
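A Python sketch of this idea using `statistics.NormalDist` from the standard library (Python 3.8 or later). The measurements are hypothetical, and fitting a normal model assumes the data are roughly normal, which should be checked in practice:

```python
import statistics
from statistics import NormalDist

# Hypothetical measurements from two groups.
group_a = [62, 65, 70, 71, 68, 74, 66, 69]
group_b = [72, 75, 78, 74, 80, 77, 73, 79]

# Fit a normal model to each group: mu and sigma are estimated from the data.
model_a = NormalDist.from_samples(group_a)
model_b = NormalDist.from_samples(group_b)

for name, model in (("A", model_a), ("B", model_b)):
    print(f"group {name}: mu ~ {model.mean:.2f}, sigma ~ {model.stdev:.2f}")

# Compare the groups through their fitted distributions (overlap is in [0, 1]).
print(f"overlap of the two fitted models: {model_a.overlap(model_b):.3f}")

# The model can answer questions the raw data cannot answer directly, such as the
# probability of exceeding a value that no one in group A actually reached.
threshold = 80
p_model = 1 - model_a.cdf(threshold)
p_empirical = sum(x > threshold for x in group_a) / len(group_a)
print(f"P(X > {threshold}) under the model: {p_model:.4f}; observed share: {p_empirical}")
```

The empirical share is zero simply because no group A value exceeds 80, while the fitted model assigns a small positive probability; whether that extrapolation is trustworthy depends on how well the normal model fits.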

Overall, understanding the difference between parameters and variables is essential for any researcher looking to analyze complex datasets accurately. By combining empirical approaches, which describe the observed distribution of each variable, with theoretical models described by parameters, researchers can gain deeper insights into how different factors interact with one another and how specific changes may affect overall trends.

Conclusion

In a world driven by numbers, it is easy to treat parameters and variables as interchangeable. After all, aren’t they just two sides of the same coin? Well, not quite, as we’ve just seen!

Parameters are characteristics that describe a population as a whole. They include quantities such as the population mean, variance and standard deviation, which provide useful information about the population being studied. Estimation methods allow us to estimate these values from a sample so that we can draw conclusions about the larger population. It’s also important to understand the difference between parameters and statistics: parameters describe the entire population, whereas statistics are calculated from sample data and are used to estimate them.

From point and interval estimates to the percentages of responses reported in surveys, one can come to appreciate how different parameters and variables actually are. Knowing which type of estimate serves which purpose gives us the ability to infer knowledge about much larger systems than we could observe directly. As a saying often attributed to Albert Einstein puts it: “Everything should be made as simple as possible, but not simpler.” This certainly applies when it comes to working with parameters in statistics!